In this article, our focus will be on the API rate limiter—a crucial element within an API Gateway. To put it simply, a rate limiter restricts the number of API invocations per time unit for individual users, IPs, or APIs. Its role is to prevent abuse, promote fair usage, and safeguard the server against potential overloads stemming from either malicious or unintentional excessive requests.

Algorithms

There are multiple algorithms available for rate limiter. Here is a small list of algorithms widely used.

Leaky Bucket Algorithm

Token Bucket Algorithm

Fixed window counter

Sliding window log

Exponential backoff

Weighted distributed rate limiting

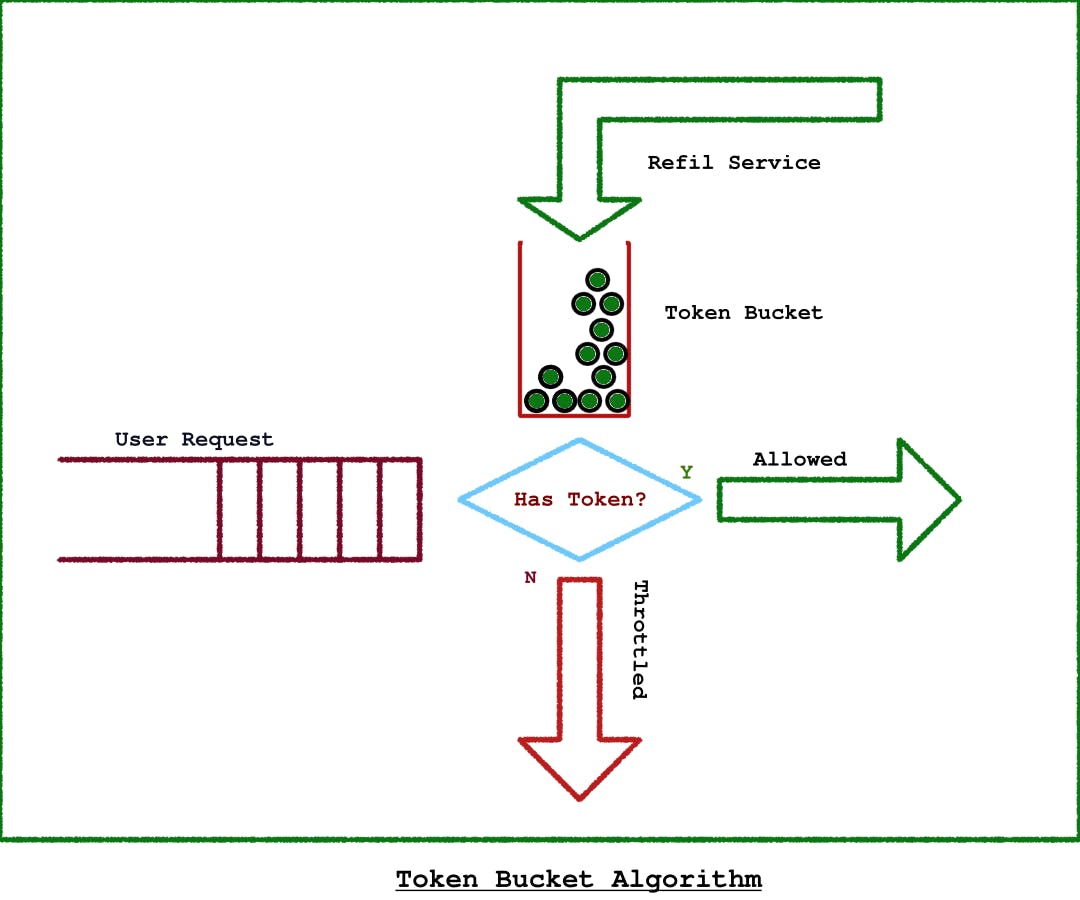

In this article, our primary focus will be on the Token Bucket algorithm. In essence, each user or IP is associated with a 'token bucket' filled with a predetermined number of tokens. Each API call consumes one token from the bucket. When the tokens are depleted, further requests are throttled until the bucket is refilled. Periodically, a service runs at predetermined intervals to replenish the tokens in the bucket. This process ensures that users have a fresh set of tokens available at the end of each interval, allowing for continued API usage.

Challenges

This algorithm mainly has 2 challenges.

A separate service needs to run on the system which could refill the token after a fixed interval. This will be an additional cost on the system.

Concurrency: If multiple requests are received from the same user/IP, there can be race condition.

Solution

To reduce the cost of the extra service, we can use something called lazy refilling. We will completely take off the extra service part and refill the respective bucket only if a particular user tries to access a resource. We will use a TTL index in Redis to keep track of time. Here is a pseudo-code, which will actually make things clearer.

//pseudocode for handling lazy loading of refill

if(user.hasToken) {

user.token--;

return true;

} else {

if(refil key is present) {

return false;

} else {

user.token = tokenLimit-1;

user.refillKey = true;

user.refillKey.setTTL(intervalLimit);

return true;

}

}

In addressing the concurrency issue, we have encapsulated the entire code responsible for token generation and updation within a Lua script. The execution of Lua scripts in Redis is atomic, ensuring that the values are consistently read and modified without the risk of race conditions. Importantly, we refrain from isolating read operations from the Lua script, as every read operation is coupled with a mandatory update. This approach further guarantees the integrity of the token-related operations in a concurrent environment.

Conclusion

In this article we have just looked into the theory part of the rate limiter. In the next article we will look into how to implement a rate limiter. We will be using nodejs and redis to do the implementation.